Our research combines optics, signal processing, optimization, and machine learning to design and optimize the hardware and software of computational imaging systems.


Information theory for imaging system design

We apply information theory, a mathematical framework originally designed for noisy communication systems, to imaging systems, which “communicate” information about the objects being imaged. Check out the project page for more information.
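As a rough illustration of treating an imaging system as a noisy channel, the sketch below computes the mutual information between object and measurement for a simplified linear Gaussian model, y = A x + n. This is a textbook special case chosen for clarity, not the estimator used in the project; the function name `gaussian_information_bits`, the two toy systems, and the noise level are all assumptions made for the example.

```python
import numpy as np

def gaussian_information_bits(A, signal_cov, noise_std):
    """Mutual information I(X; Y) in bits for y = A x + n.

    Assumes x ~ N(0, signal_cov) and n ~ N(0, noise_std**2 * I),
    for which I(X; Y) = 0.5 * log2 det(I + A S A^T / noise_std**2).
    """
    n_measurements = A.shape[0]
    snr_matrix = A @ signal_cov @ A.T / noise_std**2
    _, logdet = np.linalg.slogdet(np.eye(n_measurements) + snr_matrix)
    return 0.5 * logdet / np.log(2.0)

n_pixels = 4
prior_cov = np.eye(n_pixels)  # toy object prior: independent unit-variance pixels

# Two hypothetical systems: one images each pixel directly,
# the other records only the average of the object on every pixel.
sharp_system = np.eye(n_pixels)
blurry_system = np.full((n_pixels, n_pixels), 1.0 / n_pixels)

bits_sharp = gaussian_information_bits(sharp_system, prior_cov, noise_std=1.0)
bits_blurry = gaussian_information_bits(blurry_system, prior_cov, noise_std=1.0)
print(f"sharp system:  {bits_sharp:.2f} bits")
print(f"blurry system: {bits_blurry:.2f} bits")
```

Under these assumptions the averaging system conveys less information than the direct one, which is the kind of comparison an information-based design criterion makes between candidate systems.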

Space-time methods for dynamic reconstruction


Machine learning for computational imaging

Machine learning and neural networks are powerful tools for optimizing both the hardware and algorithms of computational imaging systems. We focus mainly on physics-based machine learning, which incorporates what we know about the physics of a system instead of relying only on large training datasets.

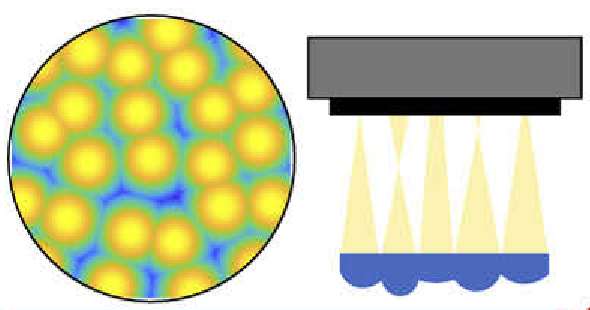

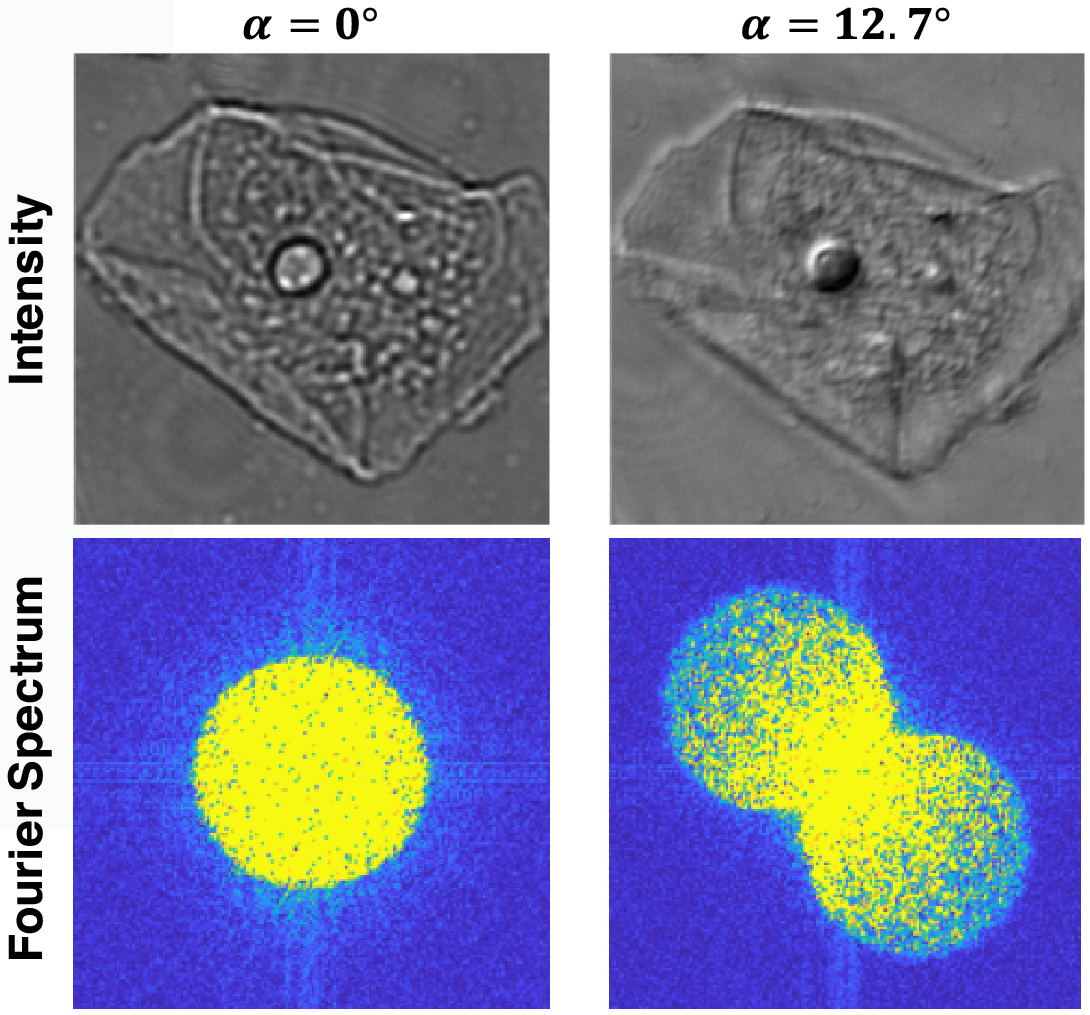

End-to-end design of optics and algorithms

Through the magic of gradient descent, we can directly optimize the design of optical components in an imaging system jointly with its reconstruction algorithm. All we need is a differentiable forward model of how the image is formed and a training dataset. We applied this to learn LED array patterns for Fourier ptychography (left) and to design phase masks for single-shot 3D microscopy (right).
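The idea of joint optimization can be sketched on a toy problem. The example below is a minimal illustration, not the method from the work mentioned above: it assumes a hypothetical linear forward model A(m) = Σ_k m[k] B[k], where the weights `m` stand in for the optical design, and a linear reconstruction `R`, and it updates both with hand-derived gradients of the mean squared reconstruction error. The operators `B`, the training data `X`, the learning rate, and the absence of noise are all assumptions made for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)
n_pixels, n_patterns, n_train = 4, 3, 200

# Hypothetical fixed physics: basis pattern k maps the object through B[k].
B = rng.normal(size=(n_patterns, n_pixels, n_pixels)) / np.sqrt(n_pixels)
X = rng.normal(size=(n_pixels, n_train))  # toy training objects, one per column

m = np.full(n_patterns, 1.0 / n_patterns)  # optical design parameters
R = np.zeros((n_pixels, n_pixels))         # linear reconstruction algorithm

def loss_and_grads(m, R):
    """Mean squared reconstruction error and its gradients w.r.t. m and R."""
    A = np.tensordot(m, B, axes=1)          # differentiable forward model A(m)
    E = R @ A @ X - X                       # reconstruction error per object
    loss = np.sum(E**2) / n_train
    grad_R = 2.0 * E @ (A @ X).T / n_train
    grad_A = 2.0 * R.T @ E @ X.T / n_train
    grad_m = np.tensordot(B, grad_A, axes=([1, 2], [0, 1]))  # chain rule through A(m)
    return loss, grad_m, grad_R

learning_rate = 0.01
initial_loss, _, _ = loss_and_grads(m, R)
for step in range(200):
    _, grad_m, grad_R = loss_and_grads(m, R)
    m = m - learning_rate * grad_m  # update the "optics"
    R = R - learning_rate * grad_R  # update the "algorithm"
final_loss, _, _ = loss_and_grads(m, R)

print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

In practice an automatic differentiation framework would compute these gradients, and the forward model would include measurement noise and physical constraints on the design parameters.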


Data-driven adaptive microscopy


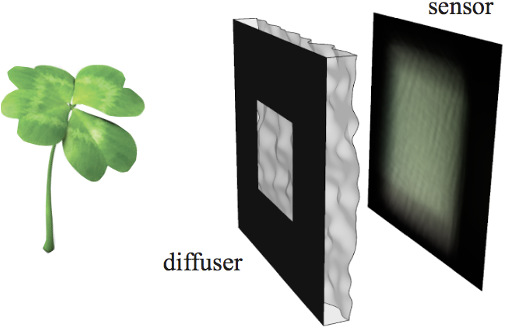

Lensless imaging and DiffuserCam


Lensless single-shot video and hyperspectral imaging


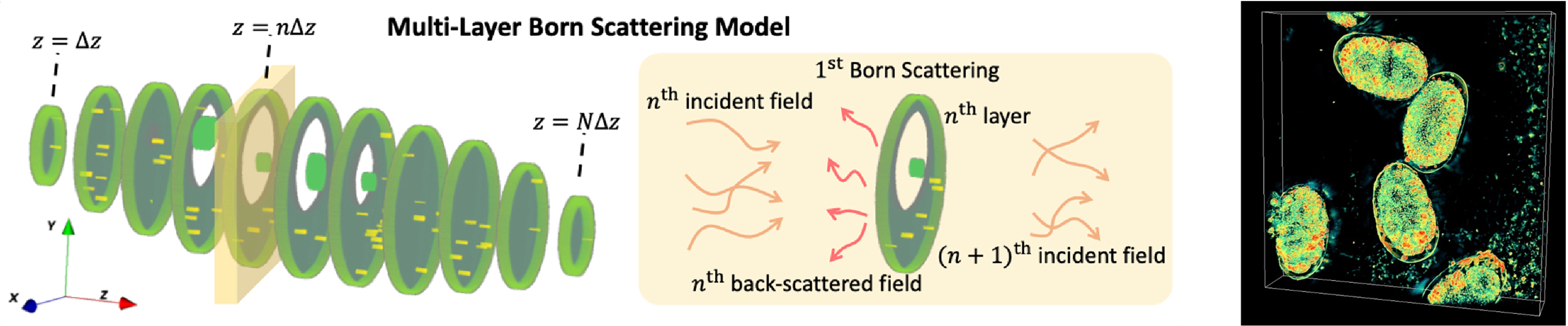

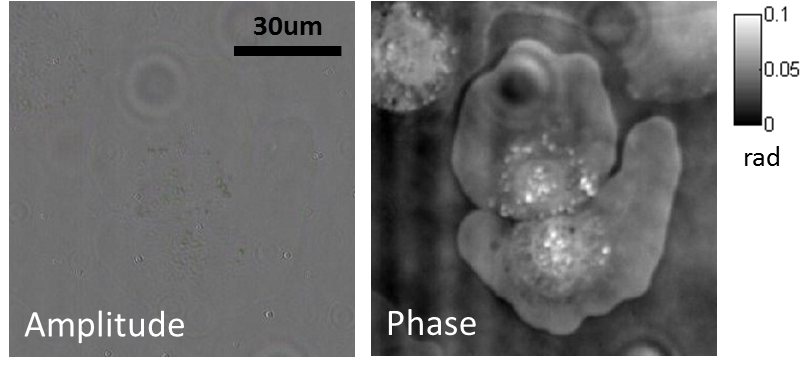

Computational imaging at non-visible wavelengths: electron microscopy, X-ray, EUV

While many systems we work on are at visible wavelengths, the same principles can be applied to other imaging systems, expanding our reach to new scientific domains and other applications. To work on these systems that we don’t build ourselves, we rely on close collaborations with institutions like the Lawrence Berkeley National Lab.

Electron microscopy for materials science

Electron microscopy can achieve resolution on the scale of individual atoms, allowing for precise determination of the atomic structure of unknown materials.

Multiple scattering models for highly scattering samples (right): D. Ren, C. Ophus, M. Chen, and L. Waller, Ultramicroscopy (2020).

Space-time reconstruction for unwanted dynamics: T. Chien, C. Ophus, and L. Waller, NeurIPS Workshop on Deep Learning and Inverse Problems (2023).

X-ray and extreme ultraviolet (EUV) for microscopy and lithography

Many applications are pushing towards shorter wavelengths because they allow for higher resolution. In lithography, for example, EUV can pattern semiconductors at higher density. However, these systems pose significant engineering challenges in error tolerance, aberration correction, physical constraints on optical design, and more.


Imaging through scattering

Most microscopy relies on a single-scattering assumption: light traveling through the sample bounces off or interacts with just one part of the sample before being imaged onto the camera. This assumption breaks down if we try to image deeper into thicker and more three-dimensional samples, making it much more challenging to image deep into the brain and other biological tissues.

More accurate multiple scattering models:

3D phase imaging of multiple-scattering samples (above right): S. Chowdhury, M. Chen, R. Eckert, R. Ren, F. Wu, N. Repina, and L. Waller, Optica (2019).

Combining 3D phase imaging with fluorescence to correct aberrations and scattering: Y. Xue, D. Ren, and L. Waller, Biomedical Optics Express (2022).

For optogenetics: Y. Xue, L. Waller, H. Adesnik, and N. Pegard, eLife (2022).


LED array microscope

We work on a new type of microscope hack, where the lamp of a regular microscope is replaced with an LED array, allowing many new capabilities. We can perform brightfield, darkfield, phase contrast, super-resolution, or 3D phase imaging, all through computational illumination tricks. L. Tian, X. Li, K. Ramchandran, and L. Waller, Biomedical Optics Express (2014).

We used this system to create the Berkeley Single Cell Computational Microscopy (BSCCM) dataset (right), which contains over 12,000,000 images of 400,000 individual white blood cells under different LED array illumination patterns, paired with a variety of fluorescence measurements. H. Pinkard, C. Liu, F. Nyatigo, D. Fletcher, and L. Waller (2024).
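One of the simplest illumination tricks is choosing which LEDs to light. An LED whose illumination angle falls within the objective's numerical aperture (NA) produces a brightfield image, and one outside it produces a darkfield image. The sketch below classifies the LEDs of a planar array on that basis; the function name `classify_leds` and the geometry values (grid size, LED pitch, array-to-sample distance, objective NA) are hypothetical and chosen only for illustration.

```python
import math

def classify_leds(grid_size, pitch_mm, distance_mm, objective_na):
    """Label each LED in a square planar array as brightfield or darkfield.

    LEDs are indexed by (i, j) relative to the optical axis. An LED at
    radial offset r illuminates the sample at angle theta with
    sin(theta) = r / sqrt(r**2 + distance_mm**2) (illumination NA in air).
    """
    half = grid_size // 2
    labels = {}
    for i in range(-half, half + 1):
        for j in range(-half, half + 1):
            r = pitch_mm * math.hypot(i, j)
            illumination_na = r / math.hypot(r, distance_mm)
            if illumination_na <= objective_na:
                labels[(i, j)] = "brightfield"
            else:
                labels[(i, j)] = "darkfield"
    return labels

# Hypothetical geometry: 5x5 array, 4 mm pitch, 70 mm below the sample, 0.1 NA objective.
labels = classify_leds(grid_size=5, pitch_mm=4.0, distance_mm=70.0, objective_na=0.1)
brightfield_leds = sorted(k for k, v in labels.items() if v == "brightfield")
darkfield_leds = sorted(k for k, v in labels.items() if v == "darkfield")
print(f"{len(brightfield_leds)} brightfield LEDs, {len(darkfield_leds)} darkfield LEDs")
```

Turning on only the LEDs in one group, or one LED at a time, yields different contrast from the same sample without moving any parts.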


Funding: